Commissioning Custom Test Equipment

Steps to take and Gotchas to avoid

- Main to-do items before starting commissioning

- Commissioning process steps

- Gotchas and tips to mitigate risk for each step

Main to-do items prior to starting commissioning

Some work needs to happen before you can accept new custom test equipment onto the manufacturing floor. Check whether you’re ready by holding a pre-commissioning review meeting.

Commissioning process steps for custom test equipment

The commissioning process for custom test equipment generally follows these seven steps:

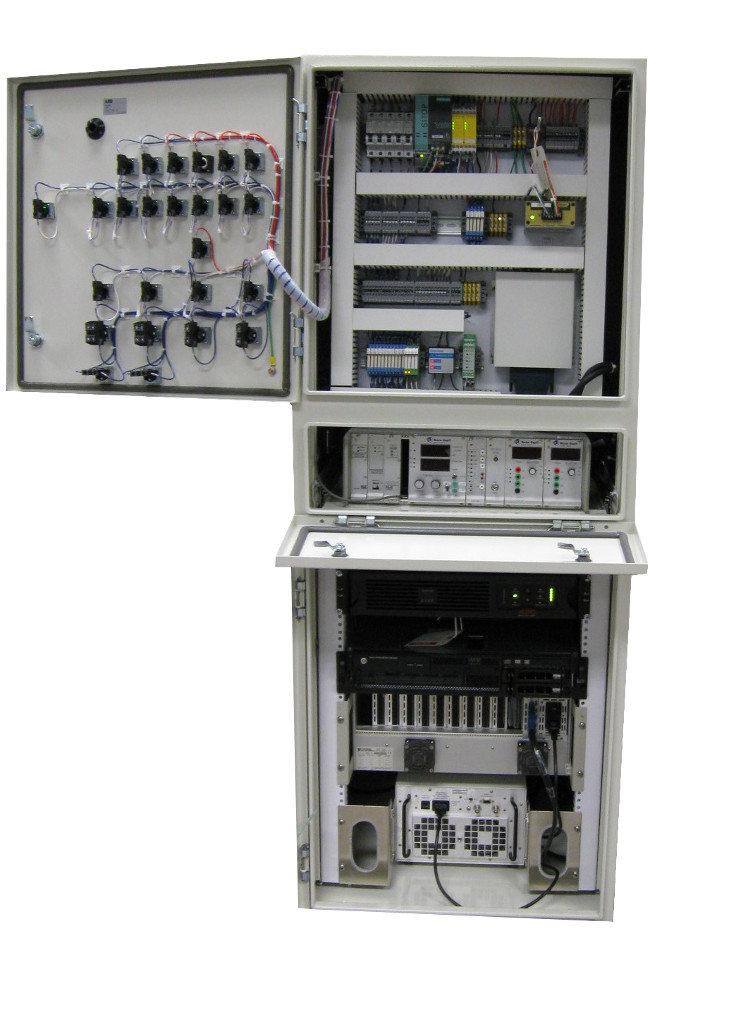

- Put the test equipment in place and connect to utilities (e.g., power, Ethernet, shop air)

- Connect the sensors, actuators and comm to the acquisition & control equipment

- Verify connections

- Calibrate the system if needed

- Dry Run – Run through a test, first manually, then automatically

- Execute for final acceptance

- Deliver final docs

Gotchas and tips to mitigate risk for each step of the commissioning process

| Step: | Put the test equipment in place and connect to utilities (e.g., power, Ethernet, shop air) |
|---|---|
| Gotcha: | The IT group can be a bottleneck (nothing against IT for sure; they’re just doing their job) when it comes to getting an Ethernet drop and allowing the PC to live on the company’s internal network. |
| Mitigation: | Get IT engaged early and often. Also, get the test PC on the network early on (before the test system is completed) to make sure you can see it on the network. Sometimes it’s not necessary to be on the main company network, so consider getting on one of your secondary networks when possible; you may save yourself some pain. |
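
One way to confirm the test PC is visible on the network is a quick reachability check run from another machine. Here’s a minimal sketch in Python, assuming the test PC exposes some TCP service (for example, remote desktop on port 3389). The function name `can_reach` and the host name in the usage comment are hypothetical placeholders, not part of any particular test system.

```python
import socket


def can_reach(host: str, port: int, timeout_s: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        # create_connection resolves the host name and opens a TCP connection.
        with socket.create_connection((host, port), timeout=timeout_s):
            return True
    except OSError:
        # Covers DNS failures, refused connections, and timeouts.
        return False


# Example usage (hypothetical host name; substitute your test PC and port):
#   can_reach("test-pc-01.example.local", 3389)
```

A failed check won’t tell you why (firewall, VLAN, DNS), but it gives you something concrete to bring to IT early.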

| Step: | Connect the sensors, actuators and comm to the acquisition & control equipment |
|---|---|
| Gotcha: | Noise – Lack of proper shielding of cables in an electrically noisy environment. |
| Mitigation: | Design for differential signaling and shielded cabling if you suspect you’ll need them. Make sure you’re making the right measurements. If you’re not taking the right measurements, what’s the point? |
| Gotcha: | If you’re migrating an existing but obsolete tester, watch out for field wiring. Specific connection points may be in a different physical location on the new test system (e.g., the old wiring is now too short). Also, the old field wiring may be too large to fit into the screw terminals on the new tester. |
| Mitigation: | Check for field wiring challenges early on during the development of the test system so that there’s time to make mods before commissioning. |

| Step: | Verify connections |
|---|---|
| Gotcha: | The UUT (unit under test) is changed while the test system is being developed, requiring tester pinout modifications. |
| Mitigation: | Don’t forget to check with the product design team at critical phases of the test system development. |
| Gotcha: | A UUT and/or test equipment is more likely to be damaged on first connection. |
| Mitigation: | If the UUT is expensive or you’re worried about breaking it for whatever reason, consider creating a simulated UUT to connect to and validate with before connecting a real UUT. |
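
As a rough illustration of the simulated-UUT idea, the sketch below checks a pinout against a software stand-in before any real hardware is connected. Everything in it is hypothetical: the `SimulatedUUT` class, the `read_voltage` method, the pin names and the voltage limits are assumptions made for illustration. In practice a simulated UUT is often physical hardware (e.g., a resistor network or loopback fixture), and the readings would come through your acquisition hardware’s driver.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PinCheck:
    pin: str            # hypothetical connector/pin label
    expected_v: float   # nominal voltage expected on that pin
    tolerance_v: float  # allowed deviation from nominal


class SimulatedUUT:
    """Stand-in for a real UUT: returns a fixed voltage for each known pin."""

    def __init__(self, pin_voltages):
        self._pin_voltages = dict(pin_voltages)

    def read_voltage(self, pin):
        # Raises KeyError for a pin the simulated UUT does not have.
        return self._pin_voltages[pin]


def verify_connections(uut, checks):
    """Return a list of failure messages; an empty list means every pin passed."""
    failures = []
    for check in checks:
        try:
            measured = uut.read_voltage(check.pin)
        except KeyError:
            failures.append(f"{check.pin}: not connected")
            continue
        if abs(measured - check.expected_v) > check.tolerance_v:
            failures.append(
                f"{check.pin}: measured {measured:.2f} V, "
                f"expected {check.expected_v:.2f} V"
            )
    return failures
```

Because `verify_connections` only needs an object with a `read_voltage` method, the same checks could later be pointed at a wrapper around the real UUT once the simulated run comes back clean.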

| Step: | Calibrate the system if needed |
|---|---|
| Gotcha: | Personnel availability. You don’t want to be sitting there ready to rock and hear “Bob knows how to calibrate this thing, but he’s not in today or tomorrow.” |
| Mitigation: | Plan ahead. Capture the names of critical personnel and understand their availability when scheduling commissioning. You can’t account for every scenario, and you may have to move forward at risk, but at least expectations will be set appropriately, which can reduce stress significantly. |
| Gotcha: | If component-level cal is desired, it’s easy to misunderstand which level of calibration is wanted: (1) calibrated with no documentation, (2) calibrated with documentation, or (3) calibrated with cal data. |
| Mitigation: | Discuss and capture calibration level requirements during the requirements gathering phase of the custom test equipment. |
| Gotcha: | Some sensors (e.g., load sensors) can be challenging to apply traceable conditions to. |
| Mitigation: | If you’re planning end-to-end calibration, make sure to have a plan in place for how to apply traceable conditions for each I/O and sensor. |
| Gotcha: | Sometimes custom test equipment takes so long to develop that the component-level cal only has a couple of months remaining before it’s out of date. |
| Mitigation: | Be prepared to run a cal soon after commissioning. |
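
To see how much calibration life is left at commissioning time, it can help to list the cal due dates of each component and flag the ones coming up soon. The sketch below is one hedged way to do that; the function names, the instrument names in the usage example and the 90-day warning window are all assumptions for illustration, and the due dates would come from your own cal certificates.

```python
from datetime import date


def days_until_cal_due(cal_due, today):
    """Days remaining until calibration expires (negative if already overdue)."""
    return (cal_due - today).days


def instruments_needing_cal(cal_due_dates, today, warn_days=90):
    """Return (name, days_left) for instruments due within warn_days, soonest first."""
    flagged = []
    for name, cal_due in cal_due_dates.items():
        days_left = days_until_cal_due(cal_due, today)
        if days_left <= warn_days:
            flagged.append((name, days_left))
    return sorted(flagged, key=lambda item: item[1])


# Example usage with made-up instruments and dates:
#   due = {"DMM": date(2024, 2, 15), "DAQ card": date(2024, 12, 1)}
#   instruments_needing_cal(due, today=date(2024, 1, 1))
```

Running this against the planned commissioning date rather than today’s date gives an earlier warning of which components will need a cal shortly after the system goes live.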

| Step: | Dry Run – Run through a test, first manually, then automatically |
|---|---|
| Gotcha: | Running tests automatically out of the gate is swinging for the fences. It can work out, but rarely does, and can cause confusion or, worse, damage to the UUT or test equipment. |
| Mitigation: | Start slowly, stepping through manually to verify basic test functionality. Gradually increase the automation and remove temporary software delays to flush out issues that can arise from long runs at full rate, such as overheating and synchronization problems. |
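
The manual-first approach can be built into the test sequencer itself. The following is a minimal sketch, not a reference to any particular test executive: `run_sequence`, its parameters and the step names in the usage comment are hypothetical. In manual mode it asks for confirmation before each step; in automatic mode it runs straight through, with an optional temporary delay between steps that you can shrink toward zero as confidence grows.

```python
import time


def run_sequence(steps, manual=True, step_delay_s=0.0, confirm=input):
    """Run (name, action) pairs in order and return the names of completed steps.

    manual=True asks for confirmation before each step and stops the sequence
    at the first answer other than 'y'. step_delay_s is a temporary pause
    inserted after each step.
    """
    completed = []
    for name, action in steps:
        if manual:
            answer = confirm(f"Run step '{name}'? [y/N] ")
            if answer.strip().lower() != "y":
                break  # operator declined; stop before running this step
        action()
        completed.append(name)
        if step_delay_s > 0:
            time.sleep(step_delay_s)
    return completed


# Example usage with placeholder actions:
#   steps = [("power on", power_on), ("measure", measure), ("power off", power_off)]
#   run_sequence(steps, manual=True)                      # first pass, step by step
#   run_sequence(steps, manual=False, step_delay_s=1.0)   # automated, slowed down
#   run_sequence(steps, manual=False)                     # full rate
```

A real sequencer would also need a safe shutdown path when a manual run is stopped partway (e.g., powering the UUT back down); that is left out here to keep the sketch short.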

| Step: | Execute for final acceptance |
|---|---|
| Gotcha: | Letting the test equipment developer execute the ATP may be a bad idea: it’s better to have someone else testing their creation. |
| Mitigation: | An end user of the test system, someone other than the person who developed it, should generally execute the acceptance tests. |
| Gotcha: | Stress testing often gets short-changed, mainly because it’s hard and time consuming. |
| Mitigation: | If the software needs to run tests for 1,000 hours without problems, that needs to be tested. Running tests while under a simulated CPU load (with tools like https://www.jam-software.com/heavyload/) might help accelerate the discovery of hard-to-reproduce bugs. |
| Gotcha: | It’s reasonably common not to have an ATP, but this is ill-advised. Without one, the test system may be delivered, “accepted”, and then 3 months later you hear: “New product doesn’t pass the test”, “We’re not seeing what we expect to see”, and “I don’t understand what I’m seeing here”. |
| Mitigation: | Plan to create an ATP document, however informal (check out this article on How to Develop a Good Acceptance Test). |
| Gotcha: | Not checking the various failure modes of both the UUT and the test equipment. |
| Mitigation: | Here are some scenarios to think about: |
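
On the stress-testing point in this step, a long unattended run is more useful when it records failures and keeps going instead of stopping at the first one. Here’s a small sketch of such a soak loop, under the assumption that a single test pass can be wrapped in a Python callable; `soak_test` and `run_once` are hypothetical names, and the injectable `clock` exists only so the loop can be exercised quickly without waiting.

```python
import time


def soak_test(run_once, duration_s, clock=time.monotonic):
    """Call run_once() repeatedly until duration_s has elapsed.

    Returns (iterations, failures), where failures is a list of
    (iteration_number, exception_repr) for every pass that raised.
    """
    start = clock()
    iterations = 0
    failures = []
    while clock() - start < duration_s:
        iterations += 1
        try:
            run_once()
        except Exception as exc:
            # Record the failure and continue, so intermittent problems
            # show up as a pattern instead of ending the run.
            failures.append((iterations, repr(exc)))
    return iterations, failures


# Example usage (run_one_test is a placeholder for your own test pass):
#   iterations, failures = soak_test(run_one_test, duration_s=8 * 3600)
```

The iteration numbers of the recorded failures can hint at whether a problem is random or builds up over time, which is often the first question when chasing hard-to-reproduce bugs.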

| Step: | Deliver final docs |
|---|---|
| Gotcha: | There’s often a mismatch of expectations between the user of the test system and the provider of the test equipment. The user often expects to see more detailed and more refined documentation. |
| Mitigation: | Discuss during requirements gathering if you have particular expectations about what this documentation ought to look like. |

If you only walk away with three takeaways on commissioning custom test equipment, remember these:

- Hold a pre-commissioning review meeting to coordinate activities

- Discuss expectations about documentation at the beginning of the test system development process

- Create an ATP

Next Steps

If you need new custom test equipment and you have a commissioning question or you’d like us to develop the test system, reach out here to start the conversation.

If you’re in learning mode, here are some additional resources you might be interested in:

- 9 Considerations Before you Outsource your Custom Test Equipment Development

- Custom Test Systems – Problems we solve

- Custom Manufacturing Test Equipment for testing in production | End of Line Test Equipment

- LabVIEW Test Automation – Custom Automated Test System Buyers Guide

- Hardware Product Testing Strategy – for complex or mission-critical parts & systems

- How to Diagnose Failing Custom Test Equipment – process, causes, getting started

- NI Hardware Compatibility – real-world tips and lessons learned

- Hardware Test Plan – for complex or mission-critical products

- How to deal with LabVIEW Spaghetti Code

- How to prepare for when your test team starts to retire

- Practical manufacturing test and assembly improvements with I4.0 digitalization

- What to do with your manufacturing test data after you collect it

- 5 Keys to Upgrading Obsolete Manufacturing Test Systems

- How Aerospace and Defense Manufacturers Can Make the Assembly and Test Process a Competitive Advantage

- Reduce Manufacturing Costs Report

- Improving Manufacturing Test Stations – Test Systems as Lean Manufacturing Enablers To Reduce Errors & Waste