Tips for improving manufacturing test with advanced test reports

This article covers four stages in reporting product test data during manufacturing:

- collection,

- analysis,

- management,

- presentation.

While there are several types of testing that can be performed on products, this article focuses on manufacturing test systems that use test-only or combined assembly and test processes, where each manufactured unit is measured against functional requirements. (If you’re interested in the product validation side, check out our article on advanced test reports for product validation.)

Why it matters – here’s what you get out of this article

To make improvements in manufacturing, measurements and data should form the basis of most decisions.

Lean and Six Sigma tools and methods have helped manufacturers for many years in the pursuit of reducing waste, increasing efficiency, and improving product quality in production. When applied to test systems, advanced reports boost these goals by:

- Enabling more people to access the data via shared network and/or cloud storage. This eliminates data silos, which typically occur when test results are stored only on the test system.

- Including metadata for the test configuration, operational usage, and data summaries for each part tested to give context to the test results.

- Using a database to store test data and associated metadata to simplify finding information.

- Utilizing modern analysis and presentation tools.

In a nutshell, the goal is to reduce the friction in organizing, handling, and presenting test data so that the people who make decisions can make better ones. Some examples:

- Noticing that one assembly and test operator for a particular product is slightly faster than the others. Discussions with that operator reveal that the speed comes from the way they handle the torque tool. The other operators adopt the same method, and overall throughput increases.

- One of the test steps for a certain product shows an abrupt change over time in one of its measurements. None of the operational metadata differs significantly, so the manufacturing engineer digs in and finds that the supplier of a subassembly was changed. Conversations with the new supplier reveal that they use a different heatsink than the prior supplier. The new supplier then changes how it produces the subassembly.

- The test time allotted for a certain test step was chosen long ago to be long enough for the unit under test to reach a stable operating pressure. Analysis of the raw pressure-vs-time data collected by the test system shows that this test time can be significantly shortened for all but a few worst-case parts. The test step is modified to end once the pressure stabilizes, so most parts pass more quickly and the unit production rate increases.
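The third example replaces a fixed test time with a stop-on-stabilization rule. Below is a minimal sketch, assuming pressure samples arrive at a fixed interval and that "stable" means the most recent N samples stay within a tolerance band. The function name, the stability rule, the window size, and the tolerance are all hypothetical; real values would have to be chosen from the archived raw data.

```python
from typing import Optional, Sequence


def stabilization_index(
    pressures: Sequence[float], window: int, tolerance: float
) -> Optional[int]:
    """Return the index of the sample at which the pressure is first
    considered stable, or None if it never stabilizes.

    Hypothetical rule: the trace is stable once the last `window` samples
    span (max - min) no more than `tolerance`.
    """
    if window < 2:
        raise ValueError("window must be at least 2 samples")
    for end in range(window, len(pressures) + 1):
        recent = pressures[end - window:end]
        if max(recent) - min(recent) <= tolerance:
            return end - 1  # last sample of the first stable window
    return None


# Example trace (arbitrary units) rising toward ~100 and settling.
trace = [0.0, 40.0, 70.0, 88.0, 96.0, 99.0, 99.6, 99.9, 100.0, 100.0, 100.0]
stop_at = stabilization_index(trace, window=4, tolerance=1.0)
print(stop_at)  # 8
```

In a test sequence, the step would end at sample `stop_at` instead of at the fixed time. Keeping a maximum time limit as well means worst-case parts that never settle still get a disposition rather than stalling the station.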

The next section describes how many manufacturers typically handle test data, and the subsequent sections offer details on ways to make better use of it.

Manufacturing collection and analysis approach

A typical approach for manufacturing test (and assembly and test) is to design the test procedure to execute as quickly as possible to keep production at a high rate. The test data collection is usually focused on a small set of “critical-to-function” parameters which are measured as quickly as reasonable. These parameters are used to decide if the part or subassembly unit is ready to be passed along to the rest of the manufacturing process on its way to the customer.

The traditional approach often consists of:

- Quickly computing the overall pass/fail disposition of a unit, based on comparison against upper and lower limits,

- Archiving only summary pass/fail data,

- Treating each unit as an anonymous entity with no need for data aggregation or unique unit ID,

- Ignoring the details about any assembly work done before or during the testing, and

- Ignoring the details about the operational conditions during testing.
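The limit comparison in the first item is simple to express in code. Here is a minimal sketch, assuming each measurement has inclusive lower and upper limits; the `Limit` type, the `disposition` function, and the measurement names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Limit:
    lower: float
    upper: float

    def passes(self, value: float) -> bool:
        # Inclusive limits: values exactly on a boundary count as passing.
        return self.lower <= value <= self.upper


def disposition(measurements: Dict[str, float], limits: Dict[str, Limit]) -> bool:
    """Overall pass/fail: the unit passes only if every limited measurement passes.

    A measurement that has a limit but no recorded value counts as a failure.
    """
    return all(
        name in measurements and limit.passes(measurements[name])
        for name, limit in limits.items()
    )


limits = {"supply_current_mA": Limit(450.0, 550.0), "leak_rate_sccm": Limit(0.0, 2.0)}
unit = {"supply_current_mA": 512.3, "leak_rate_sccm": 2.4}
print(disposition(unit, limits))  # False: the leak rate exceeds its upper limit
```

Note that the result is a single boolean, which is exactly the summary the traditional approach archives; the measured values behind it are lost unless they are stored separately.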

In our experience, some or all of the raw test data is typically not archived, except perhaps temporarily for failed parts (to find the cause of the failure) or when regulations require access to historical data.

Also, details about how the part was assembled are usually not captured, even though the assembly steps almost always affect the unit’s performance measurements. For example, each of the following affects product performance to some degree:

- the torque applied to bolts,

- the tension of motor windings,

- the temperature of the adhesive,

- and the alignment of shafts.

Furthermore, traditional analysis of manufacturing data is often minimal: simple statistics such as first-pass yield and production rate, produced for daily or weekly production meetings or shown on a real-time dashboard in the style of an Andon board, visible to manufacturing engineers and managers.
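First-pass yield, for example, is the fraction of units that pass on their first test attempt. A minimal sketch, assuming each test record carries a unit ID, a timestamp, and a pass/fail result; the record layout and serial numbers are hypothetical.

```python
from typing import Dict, Iterable, Tuple

# Hypothetical record layout: (unit_id, test_timestamp, passed)
TestRecord = Tuple[str, float, bool]


def first_pass_yield(records: Iterable[TestRecord]) -> float:
    """Fraction of units that passed on their first test attempt."""
    first_attempt: Dict[str, Tuple[float, bool]] = {}
    for unit_id, timestamp, passed in records:
        # Keep only the earliest attempt seen for each unit.
        if unit_id not in first_attempt or timestamp < first_attempt[unit_id][0]:
            first_attempt[unit_id] = (timestamp, passed)
    if not first_attempt:
        raise ValueError("no test records")
    passed_first = sum(1 for _, passed in first_attempt.values() if passed)
    return passed_first / len(first_attempt)


records = [
    ("SN001", 1.0, True),
    ("SN002", 2.0, False),  # failed first, passed on retest
    ("SN002", 5.0, True),
    ("SN003", 3.0, True),
    ("SN004", 4.0, False),
]
print(first_pass_yield(records))  # 0.5
```

The retest of SN002 does not raise the yield, which is why a unique unit ID matters even for this simple statistic.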

Finally, manufacturing data is often siloed, so it is not shared with MES/ERP platforms, product designers, or manufacturing engineers. Sharing creates a feedback loop between a) the performance of the product and the operators of the assembly and test stations, and b) the rest of the production process, as described below.

Data management and presentation approaches

Advanced reporting adds further data management and presentation approaches. These approaches use databases to simplify data access, along with analysis tools that were either unavailable or underused before about 2015.

Advanced reporting uses these steps for manufacturing testing:

- Store data in a database to enable searching, sorting, and filtering. If datasets are large, store their metadata in the database (statistical metrics, acquisition configuration, file location, and so on) so that database operations can locate pertinent information without pulling a large dataset into memory.

- Store metadata about the test system configuration, operation, and measurements in the database, in addition to the usual raw measurements on the unit under test.

- Use modern analysis and graphing tools such as Power BI, Python libraries, and Azure Machine Learning.

- Reduce the steps and friction to access reports so everyone can review and react with feedback.
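The first step, keeping summary metadata for large datasets in the database so they can be searched without loading the raw data, could look like the following minimal sketch. It uses Python’s built-in `sqlite3` module with an in-memory database; the table layout, column names, values, and file paths are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE waveform (
        unit_id     TEXT NOT NULL,
        test_step   TEXT NOT NULL,
        sample_rate REAL NOT NULL,  -- acquisition configuration (Hz)
        min_value   REAL,           -- summary statistics of the raw data
        max_value   REAL,
        mean_value  REAL,
        file_path   TEXT NOT NULL   -- where the full raw dataset is stored
    )
    """
)
conn.executemany(
    "INSERT INTO waveform VALUES (?, ?, ?, ?, ?, ?, ?)",
    [
        ("SN001", "pressure_ramp", 1000.0, 0.0, 100.2, 91.5, "/data/raw/SN001_pressure.bin"),
        ("SN002", "pressure_ramp", 1000.0, 0.0, 104.8, 93.1, "/data/raw/SN002_pressure.bin"),
        ("SN003", "pressure_ramp", 1000.0, 0.0, 99.7, 90.8, "/data/raw/SN003_pressure.bin"),
    ],
)

# Find waveforms that overshot 104 without opening any raw data files;
# only the matching files would then need to be loaded for a closer look.
rows = conn.execute(
    "SELECT unit_id, file_path FROM waveform WHERE test_step = ? AND max_value > ?",
    ("pressure_ramp", 104.0),
).fetchall()
print(rows)  # [('SN002', '/data/raw/SN002_pressure.bin')]
```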

Some additional tips for getting the most benefit from these steps are described next.

Using a database to store test data

Advanced reporting should have access to data captured about the operation of the assembly and test station (i.e., the metadata) as well as the raw test data. Having both metadata and raw data can often answer questions and yield insights into how to improve quality and operational efficiency. We recommend placing all this information in a database, especially since the storage hardware it requires is increasingly inexpensive.

There are at least two important reasons to put your test results in a database:

- Database tools are ideal for improving the efficiency of searching, sorting, and filtering operations.

- By including dataset and operational metadata, you can turn large raw datasets, such as waveforms, and process details into information that can be searched, sorted, and filtered.

For example, the database should hold, at a minimum, values for parameters that might affect the outcome of the test, such as:

- operational info such as:

  - the time of the test,

  - which operator performed the work,

  - which station was used,

  - data from any machines used during assembly,

  - environmental conditions at the time of the test,

- unique part ID,

- sensor and associated calibration information,

- setpoint values,

- metrics on any dynamic data, such as min, max, average, and primary frequency components, to make raw data easier to search,

- links to the location of large raw datasets and any “free form” information that might not fit into a table with fixed fields,

- and of course the measurements made on each unit under test.
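Reducing a waveform to searchable metrics such as min, max, average, and primary frequency component can be sketched with the Python standard library alone. This is a minimal illustration, assuming uniformly sampled real-valued data; `waveform_metrics` and the metric names are hypothetical, and the plain discrete Fourier transform used here is O(n²), so a production system would use an FFT library instead.

```python
import cmath
import math
from typing import Dict, Sequence


def waveform_metrics(samples: Sequence[float], sample_rate_hz: float) -> Dict[str, float]:
    """Summarize a raw waveform into scalar metrics for a database row.

    The dominant frequency is the DFT bin with the largest magnitude,
    computed after removing the mean so the DC component is ignored.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean = sum(samples) / n
    centered = [x - mean for x in samples]
    best_bin, best_mag = 0, -1.0
    # For real-valued input, only bins 1..n/2 carry unique information.
    for k in range(1, n // 2 + 1):
        coeff = sum(
            centered[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)
        )
        if abs(coeff) > best_mag:
            best_bin, best_mag = k, abs(coeff)
    return {
        "min": min(samples),
        "max": max(samples),
        "mean": mean,
        "dominant_freq_hz": best_bin * sample_rate_hz / n,
    }


# A 5 Hz sine on a 2.0 offset, sampled at 100 Hz for 1 s.
rate = 100.0
signal = [2.0 + math.sin(2 * math.pi * 5 * t / rate) for t in range(100)]
metrics = waveform_metrics(signal, rate)
print(metrics["dominant_freq_hz"])  # 5.0
```

Each returned value would go into its own column next to the link to the raw file, so queries such as "all units with a dominant frequency above X" never touch the raw data.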

With these elements in the database, you can find all the test data you need for your report.

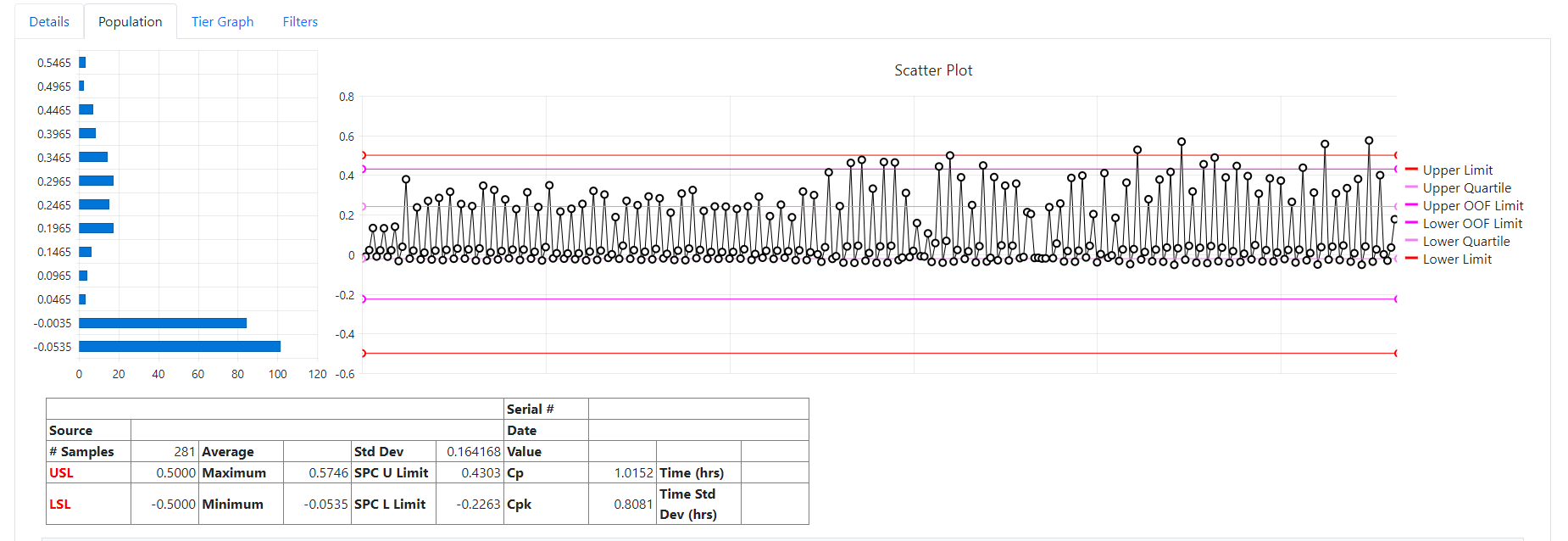

With this information in the database, reports can include statistical trends over time across units, as well as details of outliers (such as units that failed).

Storing product test results

By combining the test results for each unit with the operational information and other data in the database, it’s straightforward to compare units, track drifts in quality, and correlate those drifts with other data in the database. For example, you might find that a test result drifts toward its upper limit as the time since a certain test instrument’s last calibration increases.
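A first check for that kind of drift is the correlation between the measurement and the time since calibration. Here is a minimal sketch computing the Pearson correlation coefficient by hand; the data values are hypothetical stand-ins for rows pulled from the database.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical data: days since the instrument's last calibration, and the
# measured value for each unit tested on that instrument.
days_since_cal = [1, 5, 10, 15, 20, 25, 30]
measured = [4.01, 4.02, 4.05, 4.06, 4.09, 4.11, 4.13]
r = pearson_r(days_since_cal, measured)
print(round(r, 3))
```

A value of `r` close to +1 suggests the result climbs as calibration ages. It does not prove the instrument is the cause, but it tells the engineer where to look first.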

Furthermore, operational and environmental information associated with each assembly and test step for each unit provides feedback on the assembly and test process efficiency metrics. Some examples:

- Cycle time for a given assembly and test station and a given operator at a specific time, which can be compared against the takt time

- Machine operation time to assemble and test the unit

- Comments by the operator and/or a design or manufacturing engineer to log concerns or observations. These qualitative data may highlight some deficiency in the upstream manufacturing process or supplier issues.
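Time-based metrics like these reduce to simple aggregation once each assembly and test step is logged with start and end timestamps. A minimal sketch computing the average elapsed time per station and operator (the kind of comparison behind the faster-operator example earlier); the record layout, station names, and operator IDs are hypothetical.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple

# Hypothetical record layout: (station, operator, start_s, end_s) per unit.
StepRecord = Tuple[str, str, float, float]


def mean_step_time(records: Iterable[StepRecord]) -> Dict[Tuple[str, str], float]:
    """Average elapsed time in seconds per (station, operator) pair."""
    durations: Dict[Tuple[str, str], List[float]] = defaultdict(list)
    for station, operator, start_s, end_s in records:
        durations[(station, operator)].append(end_s - start_s)
    return {key: mean(values) for key, values in durations.items()}


records = [
    ("ST1", "op_a", 0.0, 62.0),
    ("ST1", "op_a", 70.0, 130.0),
    ("ST1", "op_b", 0.0, 55.0),
    ("ST1", "op_b", 60.0, 113.0),
]
for (station, operator), seconds in sorted(mean_step_time(records).items()):
    print(station, operator, seconds)
# ST1 op_a 61.0
# ST1 op_b 54.0
```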

Making these associations is full of friction if access to data about the unit under test and how it was tested is not straightforward.

Analysis and display tools

AI (artificial intelligence) and ML (machine learning) algorithms have enabled tools and platforms that help anyone who handles test data. A few worth considering:

- Microsoft Power BI

- Free and paid analysis apps, libraries, and services for popular programming platforms such as C#, Python, R, MATLAB, Julia, and so on. All of these offer ways to pull data from SQL databases.

- Low-code and no-code ML apps such as Microsoft Azure Machine Learning, Google AutoML, Amazon SageMaker, and so on.
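As an illustration of pulling data from a SQL database, here is a minimal sketch using Python’s built-in `sqlite3` module (other databases have their own DB-API drivers with a similar cursor interface). The `result` table, its columns, and its rows are hypothetical. The queries pull the two kinds of content a report typically needs: a trend over time across units, and the details of units that failed.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE result (unit_id TEXT, test_date TEXT, value REAL, passed INTEGER)"
)
conn.executemany(
    "INSERT INTO result VALUES (?, ?, ?, ?)",
    [
        ("SN001", "2024-03-01", 4.02, 1),
        ("SN002", "2024-03-01", 4.04, 1),
        ("SN003", "2024-03-02", 4.07, 1),
        ("SN004", "2024-03-02", 4.31, 0),
    ],
)

# Trend across units: mean value and failure count per test day.
trend = conn.execute(
    """
    SELECT test_date, AVG(value), SUM(1 - passed)
    FROM result
    GROUP BY test_date
    ORDER BY test_date
    """
).fetchall()

# Details of the outliers: the individual units that failed.
failures = conn.execute(
    "SELECT unit_id, value FROM result WHERE passed = 0"
).fetchall()

for day, avg_value, n_failed in trend:
    print(day, round(avg_value, 3), n_failed)
print(failures)  # [('SN004', 4.31)]
```

If pandas is available, `pandas.read_sql_query` can load the same query results directly into a DataFrame for plotting or further analysis.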

Exposing and sharing production test data is a neglected facet of test. We’ve seen major gains in production areas that use the feedback loop between test data and manufacturing operations as a Lean process enabler, reducing waste in what is often called the hidden factory.

Making the report accessible

If a report is not easily accessible, not to mention easily digestible, then few will read it.

It’s especially important that management understands the fundamentals of the report and is made aware of how the test created value by reducing cost and/or schedule, and perhaps uncovered results indicating whether the design is satisfactory.

Management’s understanding increases their willingness to fund future projects aimed at enhancing the use of test data throughout the company.

Consider these suggestions for improving the availability, comprehension, and value of test data:

- Post the test report to internal collaboration tools, such as SharePoint pages, Teams channels, or Slack channels.

- Create a summary of the report as a web page with links to additional detailed content.

- Create graphs and charts to visualize results so that they’re easier to digest.

- Highlight a few of the most valuable aspects of the test in a summary at the beginning of the report. Executive summaries are important for the entire engineering team, not just executives.

Besides posting the report where people can easily get to it, the best way to improve accessibility is to follow established guidelines for writing a good engineering report.

Search the web for ‘make “engineering” reports more engaging’ and you’ll find many excellent suggestions, often from university departments that need their researchers to promote their work better in order to increase funding – a goal that test engineers of all types can aspire to. Some examples:

- https://owl.purdue.edu/owl/subject_specific_writing/writing_in_engineering/writing_engineering_reports.html

- https://www.sussex.ac.uk/ei/internal/forstudents/engineeringdesign/studyguides/labwriting

- https://venngage.com/blog/consulting-report-template/ (this one mainly shows possible style improvements for readability)

Next Steps

Want help creating advanced test reports? Reach out for a chat.