Tips for improving design validation with advanced test reports

This article covers four stages in reporting product test data:

- collection,

- analysis,

- management,

- presentation.

While several types of testing can be performed on products, this article focuses on testing performed during design validation. If you’re interested in the manufacturing test side, check out our article on advanced test reports for manufacturing test.

Why are advanced test reports important?

Configuring and setting up a test for validation of a product’s design is time-consuming and sometimes very expensive (think about the costs associated with running a test in a wind tunnel!).

Running the test can also be a significant commitment in test facility usage, time spent on the test, and personnel involved in executing and/or monitoring the test. Yet after the test data has been collected, many people have difficulty managing and presenting the datasets, especially across multiple runs with various conditions and design versions.

The goal with advanced test reports is to make it easier for product design engineers and product managers to make decisions based on this expensive test data.

Advanced test reports can help enhance product design outcomes by:

- Locating similarities between one design and others.

- Identifying performance effects due to each condition variable or set of variables.

- Disseminating information and updates in a timely manner to all decision makers.

This article discusses ways to improve the organization, handling, and presentation of the data.

For example:

- Avoid putting the data into a silo. Use shared network and/or cloud storage.

- Create metadata that includes information on the test configuration, operational aspects, and test data summary metrics.

- Store the data and metadata in a database to simplify finding information.

- Utilize modern analysis and presentation tools.

We’ll dive into the reasons behind these examples below.

Design validation collection and analysis approach

The typical approach to the data collection stage in design validation testing is “gotta collect it all”.

This makes sense because the test is being done to find out what you don’t yet know. Plus, the setup and teardown of a test is often expensive, so you want to measure everything across all ranges of operational and environmental conditions to avoid the cost and time of running the test again.

Thus, a lot of data is collected with the intent that you can “figure it out” later, in the data analysis stage. That tactic typically means that data analysis will be one of two types:

- While you have a lot of data to process, you know the algorithms to use and metrics to calculate. It just takes time to inspect (and sometimes clean) and process the data, or

- You’re looking for relationships and trends between inputs and outputs, but don’t yet know which are important. You may still have to inspect and clean the data.

Traditionally, data analysis based on these two scenarios involved dumping test data to a file on a local drive (or perhaps a network drive) and using tools such as Excel or MATLAB.

While these tools are still valid, databases, data management tools, and machine learning platforms are also worth considering, as described below.

The main thrust of this article is to highlight ways to unlock the untapped information (insights) in those data files, which are too often siloed and never accessed or reported.

Management and presentation approaches

Advanced reporting utilizes additional management and presentation approaches. These approaches leverage databases (which simplify data access) and analysis tools (which were either unavailable or underused prior to ~2015).

Advanced reporting leverages these steps:

- Store data in a database to enable searching, sorting, and filtering. If datasets are large, store some metadata in this database about the raw dataset such as statistical metrics, acquisition configuration, file location, and so on, to enable some database operations without having to pull the raw dataset into memory.

- Include configuration, operational, and measurement metadata information about the test in a database.

- Utilize modern analysis and graphing tools such as Power BI, Python libraries, and Azure Machine Learning.

- Reduce the friction to access the report results, both summary and details, so everyone can review and react.
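As a rough illustration of the first step, the sketch below reduces a raw waveform to a handful of summary metrics that could be stored as one database row. The function name `summarize_waveform`, the choice of metrics, and the synthetic pressure trace are assumptions made for this example. It uses a naive DFT from the standard library to stay self-contained; for real datasets an FFT library would be the usual choice.

```python
import cmath
import math


def summarize_waveform(samples, sample_rate_hz):
    """Reduce a raw waveform to summary metrics suitable for a database row."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]  # remove the DC offset before the DFT

    # Naive O(n^2) DFT over the positive-frequency bins; fine for a sketch only.
    best_bin, best_mag = 0, 0.0
    for k in range(1, n // 2 + 1):
        coeff = sum(
            c * cmath.exp(-2j * math.pi * k * i / n) for i, c in enumerate(centered)
        )
        if abs(coeff) > best_mag:
            best_bin, best_mag = k, abs(coeff)

    return {
        "min": min(samples),
        "max": max(samples),
        "mean": mean,
        "rms": math.sqrt(sum(s * s for s in samples) / n),
        "primary_freq_hz": best_bin * sample_rate_hz / n,
    }


# Synthetic pressure trace: 50 Hz ripple on a 100 kPa baseline, sampled at 1 kHz.
rate = 1000.0
trace = [100.0 + 2.0 * math.sin(2 * math.pi * 50.0 * i / rate) for i in range(200)]
metrics = summarize_waveform(trace, rate)
print(metrics)
```

Storing these few numbers alongside the file location lets you filter runs by, say, peak pressure without loading any waveform into memory.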

Using a database

There are at least two important reasons to put your test results in a database:

- Databases are ideal for improving the efficiency of searching, sorting, and filtering operations.

- By including dataset and operational metadata, you can turn large raw datasets (such as waveforms) and process details into information that can be searched, sorted, and filtered.

For example, suppose you have a new design for an aircraft component, such as a turbine blade or a fuel pump. You test it across an array of operational setpoints, collecting performance data at each one. That data can include both static measurements (e.g., temperatures, positions, RPM) and dynamic measurements (e.g., waveforms of pressure versus time).

In this example, the database should hold, at a minimum:

- operational test info (e.g., the time of the test, operator name, test configuration),

- sensor and associated calibration information,

- operational setpoint values,

- static measurements,

- various metrics on any dynamic data such as min, max, average, primary frequency components, and so on,

- and links to the location of the large raw datasets and any “free form” information which might not fit into a table with fixed fields.

With these elements in the database, you can search and sort the test data you need for your report.
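One possible layout for these elements is sketched below using Python's built-in `sqlite3` module. The table names, column names, file paths, and values are all hypothetical, an in-memory database stands in for a shared server, and sensor calibration data is left out to keep the example short.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for a shared database server
conn.executescript("""
CREATE TABLE test_run (
    run_id        INTEGER PRIMARY KEY,
    started_at    TEXT NOT NULL,      -- ISO 8601 timestamp
    operator      TEXT NOT NULL,
    design_rev    TEXT NOT NULL,
    config_notes  TEXT                -- free-form information
);
CREATE TABLE measurement (
    run_id          INTEGER NOT NULL REFERENCES test_run(run_id),
    setpoint_rpm    REAL NOT NULL,    -- operational setpoint
    outlet_temp_c   REAL,             -- static measurement
    pressure_min    REAL,             -- metrics summarizing the dynamic data
    pressure_max    REAL,
    primary_freq_hz REAL,
    raw_data_path   TEXT              -- link to the large raw dataset
);
""")

# Made-up example rows for one run of a hypothetical design revision.
conn.execute(
    "INSERT INTO test_run VALUES (?, ?, ?, ?, ?)",
    (1, "2024-03-01T09:00:00", "A. Operator", "rev-B", "baseline build"),
)
conn.executemany(
    "INSERT INTO measurement VALUES (?, ?, ?, ?, ?, ?, ?)",
    [
        (1, 1000.0, 41.2, 98.1, 102.3, 50.0, "runs/1/rpm1000.bin"),
        (1, 2000.0, 47.9, 97.0, 104.8, 100.0, "runs/1/rpm2000.bin"),
        (1, 3000.0, 55.4, 95.2, 108.9, 150.0, "runs/1/rpm3000.bin"),
    ],
)

# Filter and sort on the stored metrics without opening any raw file.
rows = conn.execute(
    """
    SELECT m.setpoint_rpm, m.pressure_max, m.raw_data_path
    FROM measurement AS m
    JOIN test_run AS r ON r.run_id = m.run_id
    WHERE r.design_rev = ? AND m.pressure_max > ?
    ORDER BY m.pressure_max DESC
    """,
    ("rev-B", 104.0),
).fetchall()

for rpm, p_max, path in rows:
    print(rpm, p_max, path)
```

Only the rows that pass the filter point you to raw files, so the large datasets are opened only when a report actually needs them.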

Storing test run information

Since the database holds operational test information and provides access to the raw and free-form data, it’s straightforward to make comparisons between results.

For design validation, the comparison is between two or more designs. The metadata stored in the database from each design’s test permits finding data from prior similar test runs for comparison.
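A comparison like this can be expressed as a single query. The sketch below assumes a simplified, hypothetical `result` table with made-up efficiency values for two design revisions, and pairs up rows that share the same setpoint. Exact matching on setpoint values works here because the stored values are identical; real setpoints may need a tolerance.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE result (design_rev TEXT, setpoint_rpm REAL, efficiency_pct REAL)"
)
conn.executemany(
    "INSERT INTO result VALUES (?, ?, ?)",
    [
        ("rev-A", 1000.0, 71.0), ("rev-A", 2000.0, 74.5), ("rev-A", 3000.0, 72.0),
        ("rev-B", 1000.0, 72.5), ("rev-B", 2000.0, 77.0), ("rev-B", 3000.0, 71.0),
    ],
)

# Self-join: pair each setpoint of the candidate design with the baseline design.
comparison = conn.execute(
    """
    SELECT cand.setpoint_rpm,
           base.efficiency_pct AS base_eff,
           cand.efficiency_pct AS cand_eff,
           cand.efficiency_pct - base.efficiency_pct AS delta
    FROM result AS cand
    JOIN result AS base ON base.setpoint_rpm = cand.setpoint_rpm
    WHERE cand.design_rev = ? AND base.design_rev = ?
    ORDER BY cand.setpoint_rpm
    """,
    ("rev-B", "rev-A"),
).fetchall()

for rpm, base_eff, cand_eff, delta in comparison:
    print(f"{rpm:>6.0f} rpm: {base_eff:.1f}% -> {cand_eff:.1f}% ({delta:+.1f})")
```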

Referencing all test runs in a single database reduces the unfortunate consequence of needing to rerun a test because of lost, inaccessible, or hidden data. Further, searching the database will save time locating relevant test data compared with manual hunting.

As an example, one of our clients, a well-known manufacturer of semi-custom cooling units, had many years of design validation data for numerous system configurations. Whenever a new customer order arrived, they had to manually scour for the raw data, Excel files, and summary reports for similar configurations to see if one of those previously validated designs would be sufficient for the new order. Days could be spent locating the reports and associated data. With a database containing the kind of information listed above, searches are completed in minutes and reports can be reviewed almost immediately, resulting in a large time savings.

Analysis and display tools

AI (artificial intelligence) and ML (machine learning) algorithms have enabled new tools and platforms that can help anyone handling test data. A few worth considering:

- Power BI

- Free and paid analysis apps, libraries, and services available for popular programming platforms such as C#, Python, R, MATLAB, Julia, and so on. These offer ways to pull data from SQL databases, along with sophisticated data and signal analysis routines.

- Low-code and no-code ML apps, such as Microsoft Azure ML Service, Google AutoML, and Amazon SageMaker, which can ease you into using ML.

Making the report accessible and digestible

If a report is not easily accessible and digestible, then few will read it.

It’s especially important that management understands the fundamentals of the report. Make sure they are aware of how the test created value by reducing cost and/or schedule, and of any result that points to a satisfactory or unsatisfactory design, which can be fed back to the design engineers.

A key point in showing your value as part of the test group: management’s understanding will aid in future funding of test projects and will enhance the use of test data throughout the company.

Consider these suggestions for improving the availability, comprehension, and value of test data:

- Post the test report to internal posting apps, such as SharePoint pages, Teams channels, or Slack channels.

- Create a summary of the report as a web page with links to additional detailed content.

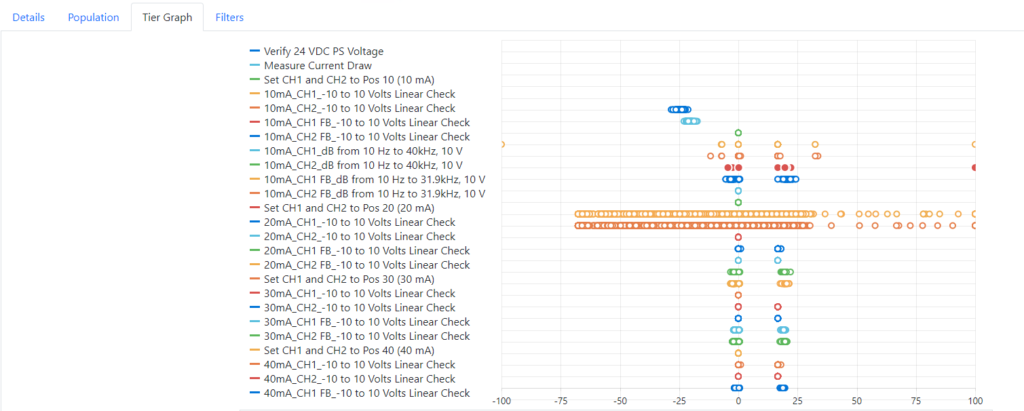

- Create graphs and charts to visualize results so that they’re easier to digest.

- Highlight a few of the most valuable aspects of the test in a summary at the beginning of the report. Executive summaries are important for the entire engineering team, not just executives.
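As one way to act on the web-page suggestion, the sketch below builds a minimal HTML summary with the key findings first and links to the detailed content after. The function name `build_summary_page`, the report title, the finding text, and the link paths are all hypothetical; a real page would likely use your team's existing templates or reporting tool.

```python
from html import escape


def build_summary_page(title, highlights, detail_links):
    """Return a minimal HTML summary: key findings first, then links to details."""
    items = "\n".join(f"    <li>{escape(text)}</li>" for text in highlights)
    links = "\n".join(
        f'    <li><a href="{escape(url, quote=True)}">{escape(label)}</a></li>'
        for label, url in detail_links
    )
    return (
        "<!DOCTYPE html>\n"
        f'<html><head><meta charset="utf-8"><title>{escape(title)}</title></head>\n'
        "<body>\n"
        f"  <h1>{escape(title)}</h1>\n"
        "  <h2>Summary</h2>\n"
        f"  <ul>\n{items}\n  </ul>\n"
        "  <h2>Details</h2>\n"
        f"  <ul>\n{links}\n  </ul>\n"
        "</body></html>\n"
    )


# Made-up report content for illustration.
page = build_summary_page(
    "Pump rev-B validation",
    ["Efficiency improved at 2000 rpm & below", "No change in outlet temperature"],
    [("Full data tables", "reports/rev-b/details.html")],
)
print(page)
```

Escaping the text with `html.escape` keeps characters such as `&` or `<` in test notes from breaking the page.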

Besides posting the report where people can easily access it, the best method to improve accessibility is to follow some rules on writing a good engineering report. Search the web for ‘make “engineering” reports more engaging’ and you’ll find many excellent suggestions, many from university departments that need to make their researchers better at promoting their work in order to increase funding – a goal that test engineers of all types can aspire to. A few examples:

- https://owl.purdue.edu/owl/subject_specific_writing/writing_in_engineering/writing_engineering_reports.html

- https://www.sussex.ac.uk/ei/internal/forstudents/engineeringdesign/studyguides/labwriting

- https://venngage.com/blog/consulting-report-template/ (this one mainly shows possible style improvements for readability)

Next Steps

Want help creating advanced test reports? Reach out for a chat.
