Hardware Testing Process – How to test products during production

A typical hardware testing process

Before testing a piece of hardware, the test engineer should be clear on the purpose of the testing. For example, the specific testing details for a reliability test will be different than the details for a production test. This article discusses the many types of testing that can be applied to a piece of hardware.

1. Create a test plan that lists the test cases used to check functionality against requirements

2. Create a testing environment (e.g., measurement hardware, test software, cabling, fixtures, etc.)

3. Place the part into the condition needed for the measurement (apply pressure, voltage, temperature, etc.)

4. Take some measurements

5. Put those measurements through one or more pass/fail criteria

6. Record the results as either summary data or verbose raw plus summary data

7. Repeat steps 3-6 as needed to sweep through input conditions

8. Create a final report document

9. Declare the part as good or bad

10. Repeat steps 3-9 for as many parts as need to be tested

Regardless of the purpose of the testing, each test passes through this series of general steps. Of course, the details of the steps will change depending on the type of test.
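The measurement steps above can be sketched as a simple loop. This is only a minimal Python illustration under stated assumptions: `apply_condition` and `measure` are hypothetical stand-ins for real instrument drivers, and the sweep values and limits are invented for the example.

```python
from dataclasses import dataclass


@dataclass
class Result:
    condition: float  # input condition applied (here, a supply voltage in V)
    value: float      # measured value (here, a current in A)
    passed: bool      # outcome of the pass/fail check


def apply_condition(voltage: float) -> None:
    """Hypothetical stand-in for commanding a power supply."""


def measure(voltage: float) -> float:
    """Hypothetical stand-in for an instrument read; simulates a 10-ohm load."""
    return voltage / 10.0


def run_test_sequence(sweep, low: float, high: float) -> list[Result]:
    """Sweep the input conditions, measuring and judging at each point."""
    results = []
    for voltage in sweep:
        apply_condition(voltage)                  # place part into condition
        value = measure(voltage)                  # take a measurement
        passed = low <= value <= high             # apply pass/fail criteria
        results.append(Result(voltage, value, passed))  # record the result
    return results


results = run_test_sequence(sweep=[3.0, 5.0, 12.0], low=0.25, high=1.0)
part_is_good = all(r.passed for r in results)     # declare the part good or bad

for r in results:
    print(f"{r.condition:5.1f} V -> {r.value:.2f} A  {'PASS' if r.passed else 'FAIL'}")
print("Part is", "good" if part_is_good else "bad")
```

In a real system the recorded results would feed the final report, and the whole sequence would run once per part.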

Hardware testing is a very broad topic. Nevertheless, we’ll discuss some of the main categories, both of testing and of hardware. We provide some important considerations while avoiding the detailed nuances of testing any particular product or sub-assembly. (If you work for a US-based company developing industrial products and want to discuss further, feel free to reach out for a chat.)

Types of hardware testing

Test plan

A hardware part is built to satisfy certain requirements, which could be dimensional, operational, visual, or of some other kind.

A test plan is a collection of test cases, each of which is designed to verify that one or more requirements are met.

A test case can map one-to-one to a specific requirement or cover multiple requirements at once, and a single requirement might need many test cases to verify it.

Manufacturing quality teams are very interested in a test plan that provides complete coverage of requirements. This coverage is usually documented in a traceability matrix, with requirements along one axis and test cases along the other (see https://en.wikipedia.org/wiki/Traceability_matrix for an example).
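As a rough illustration of how such a matrix can be used to check coverage, here is a minimal Python sketch. The requirement IDs and test case names are hypothetical, chosen only to show the one-to-one, one-to-many, and many-to-one cases described above.

```python
# Hypothetical requirement IDs and test cases, for illustration only.
requirements = ["REQ-1", "REQ-2", "REQ-3", "REQ-4"]
test_cases = {
    "TC-A": ["REQ-1"],           # one-to-one with a requirement
    "TC-B": ["REQ-2", "REQ-3"],  # one test case covering two requirements
    "TC-C": ["REQ-3"],           # a second test case for the same requirement
}

# Build the matrix: rows are requirements, columns are test cases.
matrix = {
    req: {tc: req in covered for tc, covered in test_cases.items()}
    for req in requirements
}

# A requirement with no test case in its row is a coverage gap.
uncovered = [req for req, row in matrix.items() if not any(row.values())]

print(" " * 8 + "  ".join(test_cases))
for req, row in matrix.items():
    print(f"{req:8}" + "  ".join(" X  " if hit else " .  " for hit in row.values()))
print("Uncovered requirements:", uncovered)
```

Here `REQ-4` has no test case, which is exactly the kind of gap a quality team would want the matrix to expose.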

Manufacturing test vs design validation

In this article, we’re focused on manufacturing test.

This testing occurs during production, before the part leaves the factory or is rolled up into an assembly. It is also known as end-of-line test or final test.

Such testing is designated as functional testing, where the part is treated as a black box and tested to demonstrate that it satisfies its requirements.

There’s another aspect of test not discussed here: design validation. The goal of design validation is to understand and analyze the design limits of the product, both to predict how it’s likely to perform in the real world and to provide feedback on potential design optimizations before the final revision of the design is produced (see What should I keep in mind when I’m launching a new product and considering a custom product validation system? for more).

If you want to know more about the differences between testing for manufacturing and design, and field testing too, check out Hardware Product Testing Strategy – for complex or mission-critical parts & systems.

Reliability testing

Once a hardware design has been validated, meaning the design passes all the hardware performance requirements over an extended operational range, the hardware still needs to demonstrate its durability under repeated and/or long-term use. Reliability testing is used either to verify that the expected life of the product will be met, say according to design requirements or customer expectations, or to determine the lifespan of the design so that any warranty coverage can be aligned with it, thus avoiding excessive product returns by customers.

Reliability testing typically subjects several units of the hardware to repeated actions and/or cyclic conditions until each unit fails. For example, a batch of aerospace actuators could be subjected to tens of thousands of extend/retract cycles under various loads and temperature conditions until they fail.

From these initial units, a failure analysis is performed, the design is tweaked, and reliability testing is performed on the updated design. This process repeats until the design meets robustness criteria. Reliability growth testing and the associated statistical analysis monitor the improvement of that robustness.
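To make the test-analyze-tweak loop concrete, here is a deliberately simplified Python sketch that summarizes cycles-to-failure for each design revision. The data, the revision names, and the 50,000-cycle requirement are all hypothetical, and the summary is not a formal reliability growth model; real programs typically fit a statistical life distribution rather than compare a minimum and a mean.

```python
from statistics import mean

# Hypothetical life requirement and cycles-to-failure data, for illustration only.
required_cycles = 50_000
cycles_to_failure = {
    "rev A": [31_000, 42_500, 38_200, 47_900],
    "rev B": [52_300, 61_000, 49_800, 66_400],
    "rev C": [71_200, 68_900, 80_500, 75_100],
}

summary = {}
for revision, cycles in cycles_to_failure.items():
    summary[revision] = {
        "mean": mean(cycles),                        # average life of tested units
        "worst": min(cycles),                        # earliest failure observed
        "meets_requirement": min(cycles) >= required_cycles,
    }

for revision, stats in summary.items():
    verdict = "meets" if stats["meets_requirement"] else "misses"
    print(f"{revision}: mean {stats['mean']:,.0f}, worst {stats['worst']:,} "
          f"-> {verdict} the {required_cycles:,}-cycle requirement")
```

Tracking the same summary across revisions gives a crude view of whether each design tweak actually improved durability.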

Functional testing

When a functional test is performed, measurements are made to see if the part satisfies its requirements. For example, functional tests might verify that:

- Length is within tolerance.

- Part moves fast enough.

- Part produces the right amount of current.

- RF transmitter has the expected amount of power.

- SPI drivers create good signals.

- Pump produces the correct pressure levels.

- Microcontroller reports the proper CRC for the firmware.

These types of tests are often called black-box testing to signify that the part can’t be “opened” to see how it works. In other words, the part construction has been completed and testing its subcomponents can only be done indirectly through testing its function.

It’s worth noting a couple of things about functional testing because the term can be a little fuzzy.

Sometimes functional testing is called end-of-line or manufacturing test. And, in the software testing world, testing a part (e.g., an object class) that’s a subassembly of a bigger part (e.g., an application) can be described as unit testing. In this manufacturing-centric article, functional testing means the testing of a physical device that offers no way to connect to things inside it, so testing can only be done through the access points available outside the device, hence the related term “black box testing”.

Also note that, since products are constructed from other parts (i.e., subassemblies), each subassembly will have its own functional testing procedure. So, at various stages in the manufacturing of a part, you have access to signals and measurements not available at the “end of the line” when the finished goods are boxed up for shipment to the customer.

These incremental peeks into the inner workings of subassemblies at various stages of production affect how the functional tests at subsequent assembly stages are performed. Without these intermediate peeks, the functional test at the end of the line might contain many more, and more complicated, test steps to infer the internal workings.

For example, suppose the product contains a motor mounted and tested at assembly station 3, where the motor windings are tested to look for shorts. Then, while the final test at station 11 can’t access the motor directly, it can verify that the pressure produced by the pump meets requirements. Suppose also that the motor has been designed to deliver the necessary pressure even with a small short, so you can’t know that the short exists by looking only at the pressure at station 11. However, coupled with the test at station 3, you can be assured that the motor has not been damaged or installed in some unexpected manner between stations 3 and 11. Otherwise, you would need to run one or more additional tests at station 11 to infer the presence of a motor short, such as checking vibration levels due to the imbalance produced by the short.

Functional testing is often under pressure to be fast (so production rates aren’t reduced) and to cost nothing, on the reasoning that if the product had been built right from the start, testing wouldn’t be necessary.
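One way to picture how the station 3 and station 11 results work together is to combine them per serial number before dispositioning the unit. The sketch below is a minimal Python illustration; the record layout, test names, and serial numbers are hypothetical and not taken from any particular test system.

```python
# Hypothetical per-station results keyed by serial number, for illustration only.
station_results = {
    "SN001": {"station_3_winding": True, "station_11_pressure": True},
    # Winding short found at station 3, yet pressure still passes at station 11.
    "SN002": {"station_3_winding": False, "station_11_pressure": True},
    # Station 3 record is missing, so station 11 alone can't vouch for the motor.
    "SN003": {"station_11_pressure": True},
}

REQUIRED_TESTS = ("station_3_winding", "station_11_pressure")


def disposition(record: dict) -> str:
    """Combine intermediate and end-of-line results into one verdict."""
    missing = [test for test in REQUIRED_TESTS if test not in record]
    if missing:
        return "HOLD (missing: " + ", ".join(missing) + ")"
    return "PASS" if all(record[test] for test in REQUIRED_TESTS) else "FAIL"


for serial, record in station_results.items():
    print(serial, disposition(record))
```

The second unit shows the point of the example: the end-of-line pressure check passes, and only the earlier winding test reveals the defect.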

On the other hand, design validation testing is done once during design, and is hence under (somewhat?) less pressure.

The move toward multiple “mini-tests” during the entire set of assembly steps can improve the efficiency and reduce the costs (time and money) of functional testing.

Software testing

As parts increasingly contain microcontrollers, software has become ingrained into the part’s operation. Perhaps the part uses software to control temperature or change the center frequency of an RF tuner, whereas in decades past a mechanical device, such as a bi-metal thermostat or a manually adjusted potentiometer, would handle these functions.

When performing functional testing on a part that uses software, the specific testing method still aims at checking the part’s function against requirements, but the details of how that testing happens will be different from those for the decades-old design. Specifically, the test cases will still cover the requirements as before, but the details of each test case will activate and/or measure the function differently.

Manufacturing functional testing doesn’t test the software itself, but rather the function of the part. Functional testing of the software against its own requirements is done before the product is tested during manufacture.

Software testing during development makes heavy use of unit testing, where each function and/or module has a set of unit tests that verify expected operation of those respective pieces. This type of testing is labeled “white box” since it makes use of being able to see the code “inside” the application and/or module.

Like the functional testing of the part, functional testing of the software is a black-box test, where the only access to the software is through the functions exposed to the user. Thus, when functionally testing the software, the software in the part’s microcontroller is exercised to assure:

- it’s sending expected responses to specific commands,

- any configuration data is correct (including per-part sensor calibration information),

- and the checksum of the code on the controller is correct for the previously validated and verified code base.
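The three checks above might look something like the following minimal Python sketch. Everything here is an assumption made for illustration: `SimulatedDevice`, the `ID?`, `CRC?`, and `CAL?` commands, and the calibration fields are hypothetical, and a real part would be reached over whatever serial or network interface it exposes. The only real library call is `zlib.crc32` from the Python standard library.

```python
import zlib


class SimulatedDevice:
    """Hypothetical stand-in for a part reached over a serial or network link."""

    def __init__(self, firmware: bytes, calibration: dict):
        self._firmware = firmware
        self._calibration = calibration

    def send(self, command: str) -> str:
        if command == "ID?":
            return "PUMP-CTRL"
        if command == "CRC?":
            # Report the CRC-32 of the firmware image as 8 hex digits.
            return f"{zlib.crc32(self._firmware):08X}"
        if command == "CAL?":
            items = sorted(self._calibration.items())
            return ",".join(f"{key}={value}" for key, value in items)
        return "ERR"


# CRC of the previously validated and verified code base (hypothetical image).
released_firmware = b"\x01\x02\x03\x04 released build"
expected_crc = f"{zlib.crc32(released_firmware):08X}"

device = SimulatedDevice(released_firmware, {"gain": 1.02, "offset": -0.3})

checks = {
    "expected response to ID?": device.send("ID?") == "PUMP-CTRL",
    "calibration data correct": device.send("CAL?") == "gain=1.02,offset=-0.3",
    "firmware CRC matches release": device.send("CRC?") == expected_crc,
}

for name, ok in checks.items():
    print(f"{name}: {'PASS' if ok else 'FAIL'}")
```

Note that the tester never looks inside the firmware; it only uses the commands the part exposes, which is what makes this a black-box test.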

One useful difference between hardware and software functional testing is the ability in software to change the code running on the microcontroller during functional testing. Sometimes, a special “test firmware” is downloaded as part of the functional testing to give the tester access to different sections of the device (e.g., ROM, RAM, internal sensors, …) than the “end-user firmware” would.

Certification testing

There are also various forms of certification testing (e.g., CE, FCC, UL, etc.). This testing is generally performed by certified labs dedicated to this type of testing.

How to determine what needs to be tested

Deciding what’s important to test in production is usually driven by one of two things:

- Intimate domain knowledge of the product being tested

- Required industry standards

You’ll obviously want to test any safety-related or mission-critical functionality. Beyond that, deciding what to test depends largely on performance and accuracy: which aspects of your product are most likely to fail because of variations in the production process?

Some common things to test, by type of part, include:

For electronic parts:

- power supply voltages and currents,

- signal levels and frequencies at various test points,

- range of operation to check linearity and accuracy,

- and so on.

For mechanical parts:

- dimensional tolerances,

- range of motion (e.g., speed, distance),

- forces,

- temperatures,

- power draw and output for efficiency measurements,

- flow rates,

- and on and on, because there are so many types of mechanical parts.

For optical parts:

- mechanical tolerances,

- power input and output,

- transmission and reflection properties as a function of wavelength,

- and so on.

For communications parts:

- bandwidths,

- transmission power,

- receive power,

- bit-error-rates,

- distortion,

- and so on.

And the list goes on because humans make a lot of different things.


How to test your product

At a high level, you need to figure out what makes sense to automate and what makes sense to have a human manually test. Note that a test can combine automated and manual steps; it doesn’t have to be purely one or the other.

Things that lend themselves well to automation are generally those that satisfy the following 3 criteria:

- they don’t require complex external connections or mounting to make the product run (e.g., several multi-pin connectors and harnesses, hydraulic fittings, mounting in jigs and frames),

- they can be described in a simple algorithm so a computer can be programmed to execute the steps,

- and they are very repetitive tasks that would bore a human.

Things that are better suited to manual testing are generally the opposite. More importantly, testing should be done manually whenever the cost of automating it exceeds the cost of performing it manually (don’t forget to include the wasted cost of erroneous testing done by tired humans).
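The cost comparison can be worked through with some simple arithmetic. The Python sketch below is only an illustration: the cost model is a simplification, and every number in it (labor rate, test times, error rate, cost per error, development cost) is a made-up assumption rather than data from a real project.

```python
def manual_cost(units, minutes_per_unit, labor_rate, error_rate, cost_per_error):
    """Labor cost plus the wasted cost of erroneous manual test results."""
    labor = units * minutes_per_unit / 60 * labor_rate
    errors = units * error_rate * cost_per_error
    return labor + errors


def automated_cost(units, development_cost, minutes_per_unit, labor_rate):
    """One-time development cost plus the operator time still needed per unit."""
    return development_cost + units * minutes_per_unit / 60 * labor_rate


# Hypothetical figures: $60/hour labor, 6 minutes per manual test,
# 1% erroneous manual results at $200 each, $50,000 to build the automated
# system, and 1 minute of operator time per automated test.
for units in (1_000, 20_000):
    manual = manual_cost(units, minutes_per_unit=6, labor_rate=60,
                         error_rate=0.01, cost_per_error=200)
    automated = automated_cost(units, development_cost=50_000,
                               minutes_per_unit=1, labor_rate=60)
    choice = "automate" if automated < manual else "test manually"
    print(f"{units:>6} units: manual ${manual:,.0f}, "
          f"automated ${automated:,.0f} -> {choice}")
```

With these assumed numbers, manual testing wins at low volume and automation wins at high volume, so the decision depends heavily on how many parts you expect to test.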

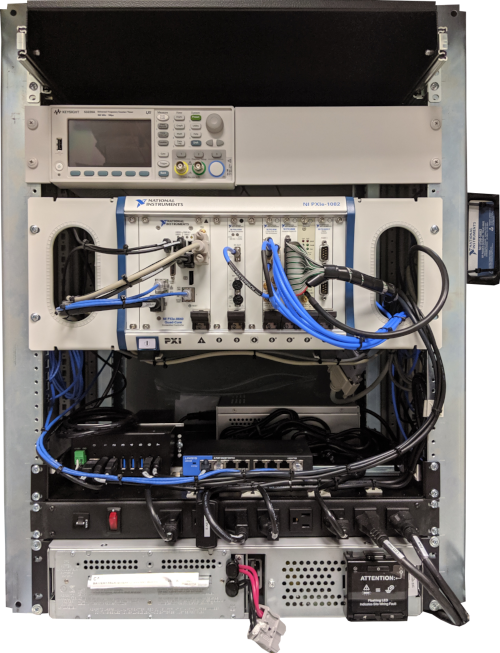

At a more detailed level, you’ll need to figure out what signal conditioning, data acquisition, UI, reporting, and so on your test system requires. See this Custom Test System Buyers Guide for more detail.
