Communicating between Parallel Loops

There are lots of ways to move data between loops in LabVIEW and to send commands along with the data to tell the receiver what to do with those data. Here are two methods: one tried-and-true, and one that I bet you didn’t know about.

Background

Queued Message Handler

A tried-and-true architecture for communicating between loops is the Queued Message Handler (QMH), often also called a Queued State Machine (QSM). The QMH combines two parts. The first is a producer event handler, which pushes user messages onto a queue. The second is a consumer loop with an embedded state machine, which lets the consumer loop also push its own messages onto the queue.
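LabVIEW diagrams don’t paste as text, so here is a rough Python analogy of the QMH structure. It assumes `queue.Queue` in place of a LabVIEW queue and a thread in place of each parallel loop. The message names are illustrative only. The point is that the consumer enqueues its own follow-up messages, while the producer only reacts to the user.

```python
import queue
import threading

def consumer_loop(q, handled, idle):
    """Consumer loop: dequeue a message, run its case, and possibly enqueue more."""
    while True:
        msg = q.get()              # blocks, like Dequeue Element with no timeout
        handled.append(msg)
        if msg == "Exit":
            return
        if msg == "Initialize":
            q.put("Process")       # the consumer feeds its own state machine
        elif msg == "Process":
            q.put("Report")
        elif msg == "Report":
            idle.set()             # queue is now empty: wait for the producer

def producer_loop(q, idle):
    """Producer: stands in for the UI event handler (user presses Start, then Quit)."""
    q.put("Initialize")            # 'Start' button value change
    idle.wait()                    # sketch only: the user waits for the result
    q.put("Exit")                  # 'Quit' button value change

messages = queue.Queue()
handled = []
idle = threading.Event()
worker = threading.Thread(target=consumer_loop, args=(messages, handled, idle))
worker.start()
producer_loop(messages, idle)
worker.join()
print(handled)  # ['Initialize', 'Process', 'Report', 'Exit']
```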

Network Streams

Another mechanism, introduced in LabVIEW 2010 but still unknown to many developers, is the Network Stream. It behaves like a queue but has TCP/IP network scope, so data can be passed between parallel loops even when they run on different machines.

QMH Overview

The QMH is well-documented on the NI website. Here is a good overview for a situation where the producer event handler manages user interface interactions: https://decibel.ni.com/content/docs/DOC-14169. In the example there, the queue data type is an enum indicating the message to handle. Here’s a code snippet.

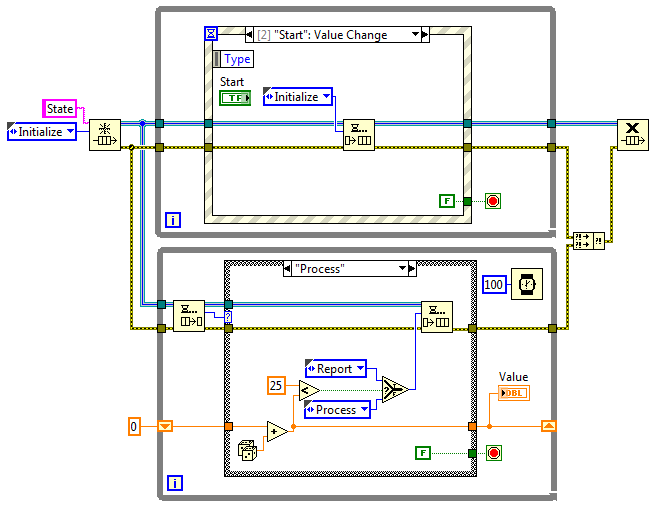

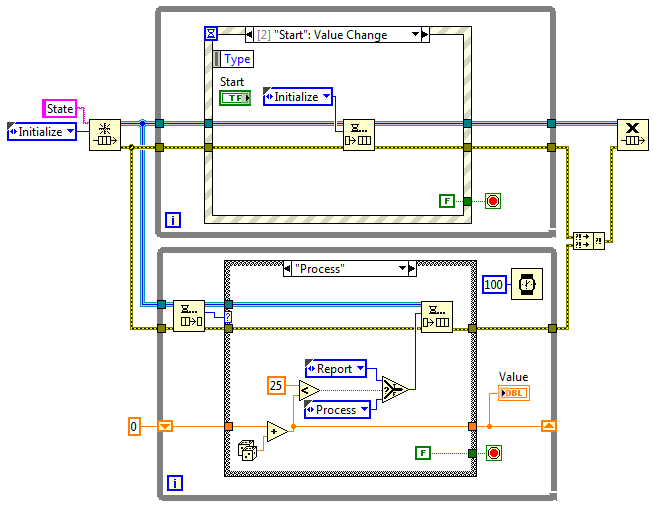

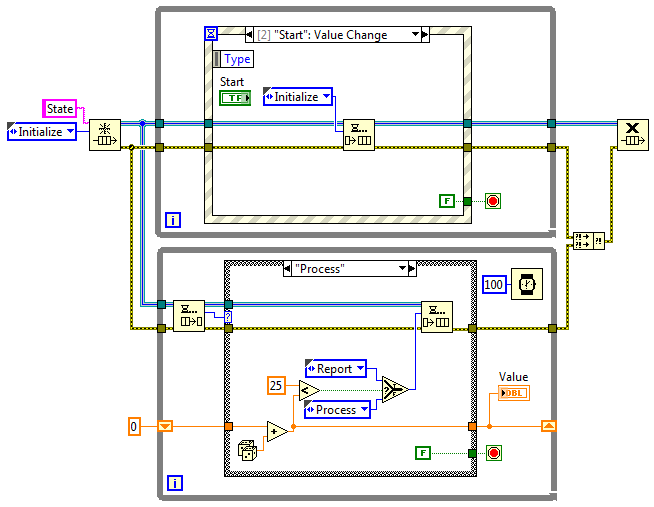

Figure 1 – QSM Example

In this example, pressing the Start button on the UI puts the ‘Initialize’ state on the queue, where it is received by the consumer loop. The ‘Initialize’ case of the consumer loop pushes a ‘Process’ state onto the queue. In the ‘Process’ state, a random number is added to a running total held in a shift register on the consumer while loop. ‘Process’ keeps pushing itself back onto the queue until the total exceeds 25. At that point, the ‘Report’ state is pushed onto the queue and the VI reports the result. Since nothing else is pushed onto the queue, the consumer loop then waits for further instructions, which must come from the producer loop.
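The consumer side of this example can be sketched in Python as a loop over a message deque. This is a sketch, not a translation of the NI example. A local `deque` stands in for the LabVIEW queue, and the injected `rng` stands in for LabVIEW’s Random Number (0-1) function. Returning when the deque is empty is a simplification: the real consumer would block and wait for the producer.

```python
import random
from collections import deque

def run_consumer(rng, limit=25.0):
    """Mimic the consumer cases of Figure 1: Initialize -> Process (repeated) -> Report."""
    pending = deque(["Initialize"])   # the producer enqueued 'Initialize' (Start pressed)
    total = 0.0                       # plays the role of the shift register
    trace = []
    while pending:                    # empty queue: the real loop would wait here
        state = pending.popleft()
        trace.append(state)
        if state == "Initialize":
            total = 0.0
            pending.append("Process")
        elif state == "Process":
            total += rng.random()     # random number in [0, 1)
            # Keep processing until the total exceeds the limit, then report.
            pending.append("Process" if total <= limit else "Report")
        elif state == "Report":
            print(f"Total {total:.2f} after {trace.count('Process')} Process iterations")
    return total, trace

total, trace = run_consumer(random.Random(1))
```

Because each random number is below 1, the total cannot exceed 25 in fewer than 26 ‘Process’ iterations.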

An obvious benefit of this architecture is that the UI stays responsive while the application is busy performing a task. Some earlier QSM implementations used a single loop (i.e., producer and consumer in one loop), but such a design can become unresponsive to the user while a long task is running.

Better Queue Element

By now, most readers know that it is better to define a queue data type that holds both the message and the data to act on, or that otherwise describes the message payload more precisely. The element holding the data is often a Variant, which allows (almost) any type of data to be passed through the queue.
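A minimal Python analogy of such a queue element, assuming a dataclass in place of the LabVIEW cluster and `typing.Any` in place of the Variant. The message names and payload shapes are hypothetical. Each handler case knows which payload type to expect, much as a LabVIEW case would use Variant To Data.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Any

class Msg(Enum):
    """Plays the role of a typedef'd enum of message names (names are illustrative)."""
    INITIALIZE = auto()
    PROCESS = auto()
    REPORT = auto()
    ALARM = auto()

@dataclass(frozen=True)
class QueueElement:
    """Message plus payload, like a cluster of an enum and a Variant."""
    message: Msg
    data: Any = None   # 'Any' stands in for the LabVIEW Variant

def handle(element: QueueElement) -> str:
    # Each case interprets the payload it expects for its message.
    if element.message is Msg.ALARM:
        channel, value = element.data
        return f"alarm on {channel}: {value}"
    if element.message is Msg.REPORT:
        return f"total = {float(element.data):.2f}"
    return element.message.name.lower()

print(handle(QueueElement(Msg.ALARM, ("ai0", 9.5))))  # alarm on ai0: 9.5
```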

Non-Local Queue and Event Usage

There is no reason to confine queue usage to this producer and consumer loop pair. Because a queue can be named, any VI can obtain a reference to it by that name. Thus, other parallel loops can communicate with this consumer loop by pushing messages onto the queue. For example, a background data acquisition or safety monitoring loop can push sampled data or alarm information onto this queue for handling at the “main” application level.
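Named queues can be imitated in Python with a small registry. `obtain_queue` below is a hypothetical analogue of LabVIEW’s Obtain Queue, not an existing Python API. It returns the same queue object for the same name, so a background loop can reach the main queue without being wired to it.

```python
import queue
import threading

_named_queues = {}
_registry_lock = threading.Lock()

def obtain_queue(name):
    """Hypothetical analogue of LabVIEW's Obtain Queue: one queue per name."""
    with _registry_lock:
        if name not in _named_queues:
            _named_queues[name] = queue.Queue()
        return _named_queues[name]

def safety_monitor_step(reading, limit=100.0):
    """One iteration of a background loop. It needs no wire to the main loop,
    only the queue name."""
    if reading > limit:
        obtain_queue("Main Queue").put(("Alarm", reading))

safety_monitor_step(42.0)    # within limits: nothing is sent
safety_monitor_step(120.0)   # over the limit: an alarm message is queued
main_q = obtain_queue("Main Queue")
print(main_q.get_nowait())   # ('Alarm', 120.0)
```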

Likewise, user events can be created so that the producer’s event structure responds to events other than a button press on the UI. An alarm handler, for instance, is well suited to monitoring an event that the application generates when it detects a problem. When the alarm event fires, the producer’s event case analyzes the alarm and determines the best course of action. It then pushes the appropriate messages onto the queue for the consumer loop to handle.
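Here is a sketch of that alarm event case in Python. Python has no direct equivalent of LabVIEW’s Create User Event, so a plain function call stands in for the event firing. The severity levels and message names are hypothetical.

```python
import queue

def on_alarm(alarm, consumer_q):
    """Producer-side event case for a hypothetical 'Alarm' user event:
    analyze the alarm, then enqueue messages for the consumer loop."""
    if alarm["severity"] == "critical":
        actions = ["Abort Test", "Go To Safe State", "Log Alarm"]
    elif alarm["severity"] == "warning":
        actions = ["Log Alarm"]
    else:
        actions = []                      # informational: nothing to do
    for action in actions:
        consumer_q.put((action, alarm))   # message plus payload
    return actions

q = queue.Queue()
on_alarm({"source": "oven temperature", "severity": "critical"}, q)
print([q.get_nowait()[0] for _ in range(q.qsize())])
# ['Abort Test', 'Go To Safe State', 'Log Alarm']
```

Keeping the analysis in the producer means the consumer only ever sees ordinary messages. It does not need a separate alarm path.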

Changing Thought Patterns?

It is interesting to see how far the adoption of good programming practices has come in the last several years. Take a look at the discussion in this forum: http://forums.ni.com/t5/LabVIEW/please-don-t-do-it/m-p/381751?view=by_date_ascending. Note how some developers consider a QSM overkill in situations where sequential flow (i.e., stacked sequence frames) is sufficient. Granted, that may be true for a simple app, but an app hardly ever stays simple.

Network Streams Overview

Network Streams were introduced in LabVIEW 2010. They communicate over TCP/IP between a single writer and a single associated reader. They also provide lossless data transfer and are robust to connection interruptions. In effect, they are queues that operate across a network.

Basic Properties

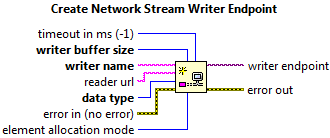

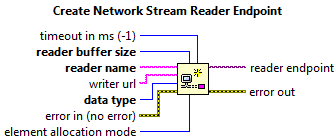

Network Streams define a connection between a single writer and a single reader. Such a point-to-point connection implies that data transfer is in one direction. To establish a stream, you create a writer with the ‘Create Network Stream Writer Endpoint’ VI and a reader with the ‘Create Network Stream Reader Endpoint’ VI, as shown in Figures 2 & 3.

Network Streams natively support many LabVIEW data types, similar to Network Shared Variables. Data are flattened to be compatible across different versions of the LabVIEW runtime engine.

An application might use multiple Network Streams. A typical arrangement is to have a Network Stream that moves data between machine A and machine B and another Network Stream that goes the reverse direction (B to A).

A Network Stream is defined with an element of some data type and a buffer size to hold a specified number of those elements. If during writing the buffer becomes full, the writer will hold off pushing more data into the buffer until the reader removes one or more elements, just like a typical LabVIEW queue.

Figure 2 – Create the writer

Figure 3 – Create the reader
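The hold-off behavior can be illustrated with a bounded Python queue. This is only an analogy: `queue.Queue(maxsize=...)` plays the stream buffer, and a `put` with a short timeout plays a write that cannot complete while the buffer is full. The buffer is shrunk to 4 elements to keep the example small.

```python
import queue

stream_buffer = queue.Queue(maxsize=4)   # tiny buffer to keep the demo short

for i in range(4):
    stream_buffer.put(i)                 # writer fills the buffer

try:
    stream_buffer.put(4, timeout=0.05)   # a write with a short timeout
    accepted = True
except queue.Full:
    accepted = False                     # held off: no free slot within the timeout
print(accepted)                          # False

stream_buffer.get()                      # reader removes one element (0)
stream_buffer.put(4, timeout=0.05)       # now the write goes through
print(list(stream_buffer.queue))         # [1, 2, 3, 4]
```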

Example

Here’s a simple example that creates a Network Stream on a host to listen to data created by a target.

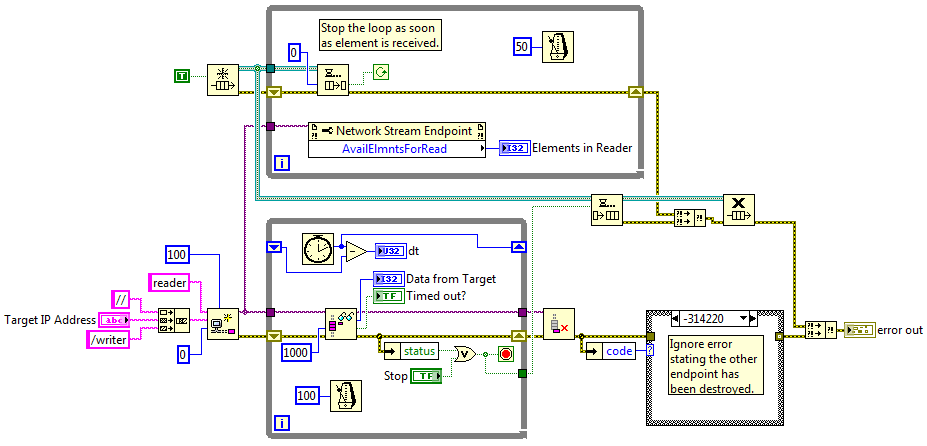

The Host block diagram is below.

Figure 4 – Host block diagram

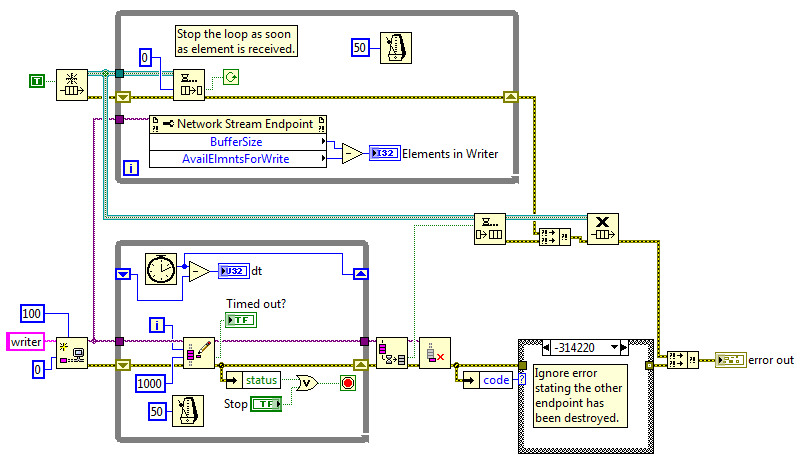

The Target block diagram is next.

Figure 5 – Target block diagram

Note how the writer (in the target) has a loop time of 50 ms, whereas the reader (on the host) has a loop time of 100 ms. Because the writer produces elements twice as fast as the reader consumes them, it eventually fills the 100-element buffer defined on the Network Stream reader. From then on, the writer is held off so that the buffer cannot overflow. As a result, the ‘dt’ value in the writer loop changes from about 50 ms to about 100 ms.
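A quick back-of-the-envelope check: the writer adds about 20 elements/s and the reader removes about 10 elements/s. The buffer therefore gains roughly 10 elements/s and should fill in about 10 s. The Python simulation below reproduces the jump in ‘dt’ under simplifying assumptions: a single 100-element buffer, no network latency, no writer-side buffering, and exactly one element removed per reader iteration. Real timing will differ.

```python
def writer_loop_times(writer_ms=50, reader_ms=100, capacity=100, iterations=400):
    """Return the writer's per-iteration dt (ms) for a bounded buffer drained by a
    slower reader. Simplified: one buffer, no latency, one element per read."""
    fill = 0                 # elements currently in the buffer
    next_read = reader_ms    # time of the reader's next removal
    t = 0                    # start time of the current writer iteration
    dts = []
    for _ in range(iterations):
        while next_read <= t:            # reader iterations that happened by time t
            fill = max(0, fill - 1)
            next_read += reader_ms
        write_done = t
        while fill >= capacity:          # buffer full: the writer is held off...
            write_done = next_read       # ...until the reader frees a slot
            fill -= 1
            next_read += reader_ms
        fill += 1
        next_t = max(t + writer_ms, write_done)   # loop wait vs. blocked write
        dts.append(next_t - t)
        t = next_t
    return dts

dts = writer_loop_times()
first_slow = dts.index(100)
print(first_slow, sum(dts[:first_slow]))   # 200 10000
```

In this model the writer runs at 50 ms for its first 200 iterations (10 s). After that, every iteration takes 100 ms, matching the reader’s pace.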

When the Stop button is pressed on either the host or the target, the Network Stream is destroyed. The other side then detects an error and stops its loop as well. The error code is checked so that the error caused by the other endpoint closing is ignored. Notice how an ordinary queue is used to stop the upper parallel loop. A flag is pushed into the queue just before the Network Stream is destroyed, so the dequeue function in the upper loop returns ‘F’ for its timed-out flag.
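The stop-flag pattern can be sketched in Python with `queue.Queue.get(timeout=...)`. A timeout (LabVIEW’s timed-out flag = T) means “keep running”, and any element arriving (flag = F) means “stop”. The article does not say what the upper loop does on each iteration, so the periodic work here is a placeholder.

```python
import queue
import threading
import time

stop_q = queue.Queue()    # an ordinary queue used only as a stop signal
work_done = []

def upper_loop(poll_s=0.02):
    while True:
        try:
            stop_q.get(timeout=poll_s)   # the timeout doubles as the loop's wait
        except queue.Empty:
            # Timed out ('timed out?' = T): no stop request, do the periodic work.
            work_done.append(time.monotonic())
            continue
        # Element received ('timed out?' = F): the stop flag arrived, so exit.
        break

loop = threading.Thread(target=upper_loop)
loop.start()
time.sleep(0.1)            # let the loop run a few iterations
stop_q.put(True)           # push the flag before tearing down the stream
loop.join()
```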

Specifying the Endpoint

Conveniently, only one side of a Network Stream needs to specify the IP address of the other side. This makes Network Streams well suited to a system with a batch of cRIOs or sbRIOs (xRIOs) all sending data to a host. The host needs to know the IP addresses of all the xRIOs (and the names of their writers) so that it can create one reader per writer. The xRIOs, however, do not need to know the IP address of the host, which simplifies deployment to multiple xRIOs. You can also create Network Streams between multiple applications on the same machine.
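Here is a sketch of the host-side bookkeeping. The host holds a list of target addresses and writer names and derives one remote-writer URL per target, and each of those URLs would feed one reader. `writer_urls` is a hypothetical helper. The `//host/endpoint_name` URL form is an assumption to verify against NI’s Network Streams documentation for your LabVIEW version. The IP addresses come from the 192.0.2.0/24 documentation range and are not real targets.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Target:
    ip: str            # address of one cRIO/sbRIO
    writer_name: str   # name that target gave its writer endpoint

def writer_urls(targets):
    """Hypothetical helper: the remote-writer URL each host-side reader would use.
    Assumes the '//host/endpoint_name' form; check NI's docs for the exact syntax."""
    return {t.ip: f"//{t.ip}/{t.writer_name}" for t in targets}

# Example addresses from the 192.0.2.0/24 documentation range.
fleet = [Target("192.0.2.10", "crio_data"), Target("192.0.2.11", "crio_data")]
for ip, url in writer_urls(fleet).items():
    print(ip, "->", url)
```

Only the host holds this list, so adding a target means editing one list on the host. The targets themselves stay unchanged.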

The writer and reader creation VIs each wait for the other endpoint to connect, so it does not matter which side is started first.
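The “wait for the peer” behavior is a rendezvous, which can be shown in Python with `threading.Barrier(2)`. This is an analogy only: each thread stands in for one endpoint-creation VI, and whichever side starts first simply blocks until the other arrives. The barrier timeout is there only so the sketch cannot hang.

```python
import threading
import time

def connect(first, second, delay_s=0.05):
    """Start one endpoint, then the other after a delay; both wait for the peer."""
    peer_ready = threading.Barrier(2, timeout=2.0)   # timeout keeps the sketch from hanging
    connected = []

    def endpoint(name):
        peer_ready.wait()          # like an endpoint VI blocking until its peer appears
        connected.append(name)

    early = threading.Thread(target=endpoint, args=(first,))
    early.start()
    time.sleep(delay_s)            # the other side starts later
    late = threading.Thread(target=endpoint, args=(second,))
    late.start()
    early.join()
    late.join()
    return sorted(connected)

print(connect("writer", "reader"))   # ['reader', 'writer']
print(connect("reader", "writer"))   # ['reader', 'writer']
```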

Data Types

Some data will not transfer across a Network Stream, such as a reference to an object. A reference on the source machine will very likely make no sense on the destination machine, so references are not allowed.

Because Network Streams are optimized for high data throughput, small transfers such as a single command may see significant latency. If responsiveness matters, flush the stream to force the data to be transferred promptly.
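To see why a small write can lag, here is a toy Python model of a throughput-oriented sender that holds elements until a batch fills. It illustrates the trade-off only and does not describe how Network Streams are implemented internally. `BatchingWriter` and its batch size are hypothetical, and `flush()` plays the role of flushing the stream.

```python
class BatchingWriter:
    """Toy throughput-oriented sender: elements are held until a batch fills,
    unless flush() forces them out. Names and batch size are hypothetical."""

    def __init__(self, batch_size, send):
        self.batch_size = batch_size
        self.send = send          # callable that 'transmits' a list of elements
        self.pending = []

    def write(self, element):
        self.pending.append(element)
        if len(self.pending) >= self.batch_size:
            self.flush()

    def flush(self):
        if self.pending:
            self.send(list(self.pending))
            self.pending.clear()

sent = []
w = BatchingWriter(batch_size=64, send=sent.append)
w.write({"cmd": "Abort"})   # a single small command...
print(sent)                 # [] : still sitting in the local buffer
w.flush()
print(sent)                 # [[{'cmd': 'Abort'}]] : delivered right away
```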

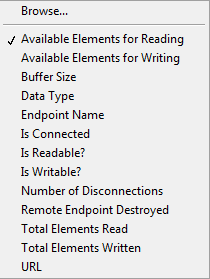

Properties

From the writer side, you can see how well the reader is keeping up with the stream. You can then adjust the burden on the reader by slowing down or speeding up the writer. Many other Network Stream endpoint properties are available, as shown in the snippet from the property pull-down menu.
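One way to use that information is a simple writer-side throttle, sketched here in Python. The text does not name the properties involved, so this sketch assumes only that you can derive how full the buffer is as a fraction from 0.0 to 1.0. The thresholds and limits are illustrative.

```python
def next_period_ms(period_ms, fill_fraction, low=0.25, high=0.75,
                   min_ms=10.0, max_ms=1000.0):
    """Hypothetical writer-side throttle.

    fill_fraction: how full the stream buffer is (0.0 empty .. 1.0 full), assumed
    to be derived from an endpoint property read on the writer side.
    """
    if fill_fraction > high:
        period_ms *= 2          # reader is falling behind: write less often
    elif fill_fraction < low:
        period_ms /= 2          # reader has headroom: write more often
    return max(min_ms, min(max_ms, period_ms))

period = 50.0
for fill in (0.1, 0.5, 0.9, 0.95):
    period = next_period_ms(period, fill)
    print(fill, period)
```

Doubling and halving the period keeps the controller simple. A proportional adjustment would settle more smoothly, at the cost of more tuning.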

If a Network Stream is destroyed on either the writer or the reader side, the other side gets an error the next time it accesses the stream. This tight coupling across parallel loops (or processes) makes it easy to coordinate the two, for example to shut both down together.
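A Python sketch of a reader loop that treats “peer closed” as a normal shutdown while still surfacing any other error. The article does not give the LabVIEW error code involved, so the hypothetical `PeerClosed` exception stands in for it. `FakeReaderEndpoint` is a test double that replays a few elements and then reports the writer as gone.

```python
class PeerClosed(Exception):
    """Stand-in for the error returned once the other endpoint is destroyed."""

class FakeReaderEndpoint:
    """Toy reader endpoint: replays elements, then reports the peer as closed."""

    def __init__(self, elements):
        self._elements = list(elements)

    def read(self):
        if not self._elements:
            raise PeerClosed("writer endpoint was destroyed")
        return self._elements.pop(0)

def reader_loop(endpoint):
    received = []
    while True:
        try:
            received.append(endpoint.read())
        except PeerClosed:
            # Expected shutdown path: ignore this error and stop the loop.
            # Any other exception still propagates, like an unexpected error code.
            return received

print(reader_loop(FakeReaderEndpoint([1.5, 2.5, 3.5])))  # [1.5, 2.5, 3.5]
```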

More info

Check out https://www.ni.com/en-us/innovations/white-papers/10/lossless-communication-with-network-streams–components–archite.html for more details. This white paper contains good tips for maximizing performance (speed and memory), some performance graphs comparing raw TCP with Network Streams, and some information about minimizing latency.

Combining Network Streams with QSM

Now you can implement a QSM between a host PC (running the UI) and a real-time target (such as a cRIO) that does the actual work! The same data type used in the normal queue, holding the message and its associated Variant data, can be passed through the Network Stream.
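A minimal Python illustration of sending the same message type over a wire. The message is flattened to bytes on one side and rebuilt on the other. JSON is an illustrative choice here, not what LabVIEW uses, and `Message`, `flatten`, and `unflatten` are hypothetical names.

```python
import json
from dataclasses import dataclass
from typing import Any

@dataclass
class Message:
    name: str          # e.g. 'Initialize', 'Process', 'Report'
    data: Any = None   # stands in for the Variant payload

def flatten(msg: Message) -> bytes:
    """Serialize a message for the wire. JSON is only an illustrative choice."""
    return json.dumps({"name": msg.name, "data": msg.data}).encode("utf-8")

def unflatten(raw: bytes) -> Message:
    """Rebuild the message on the receiving side."""
    fields = json.loads(raw.decode("utf-8"))
    return Message(fields["name"], fields["data"])

# Host UI -> cRIO: the same message type as the local queue, now crossing the network.
command = Message("Process", {"limit": 25.0})
received = unflatten(flatten(command))
print(received)  # Message(name='Process', data={'limit': 25.0})
```

`json.dumps` raises `TypeError` for objects it cannot serialize, such as a lock or an open file. This echoes the point above that references cannot be sent across a Network Stream.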

Distributed architectures are made manageable by using proper development techniques from the beginning.

Summary and Next Steps

Using parallel loops to run separate sections of an application is a great way to divide functionality into manageable parts. As soon as you realize the power of these designs, you also realize that good data-handling mechanisms are needed. This article presented an established architecture and a newer tool to show that a flexible, well-proven architecture can be quickly extended with new capabilities.
